I worked as a “pure” sysadmin (sorry - “DevOps Engineer”) for around 2 years. It was never a role I intended to stay in for long, but during that time, I learned a lot. I wanted to sum up some of the “obvious” systems things I learned in that timespan.

Over those 2 years, I’ve been putting together point-form notes of dos and don’ts that I learned on the job, for when I eventually leave that role. It’s been a slow transition into other roles (e.g. “shifting left” into development), so I still have my hands in a lot of systems stuff. Nevertheless, I’d say that my actual sysadmin days are over. That means it’s time to polish and publish the list!

These are primarily things that weren’t on my pre-sysadmin radar, or things I changed my mind on. Many of these are very basic things that I needed some real-world pain or perspective to get on board with. If you’re a new sysadmin, or are a developer not responsible for systems yourself, this is probably worth a read. Comment with some of the “duh” lessons that you learned from experience!

Bash Sucks

I should say that I know it’s cool, and I know there are a lot of powerful tricks. I’m certainly not saying you should never use it, just that you should think twice before scripting in it.

Bash is the go-to language - even among many non-sysadmins - for building simple scripts/CLIs. As soon as those scripts become more complicated, Bash becomes hard to work with, and requires a lot more tricks and checking to perform the tasks at hand.

I’ve consistently seen Bash scripts become huge sunk-cost issues, when making the smallest changes is difficult. Chances are if you want a dozen or so “just works” lines of code, you should look at Python or something else. My personal rule of thumb is “if it’s anything more than a command sequence, and you don’t need Bash utilities, don’t use it”.
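As a rough illustration of that rule of thumb, here is a minimal Python sketch of a “command sequence plus a bit of logic” script. The `run` and `main` helpers and the child command are made up for the example; the point is only that arguments are passed as a list (no shell quoting) and failures surface in one place.

```python
import subprocess
import sys


def run(args):
    """Run one command without a shell; raise if it exits non-zero."""
    # Passing a list avoids shell word-splitting and quoting surprises.
    result = subprocess.run(args, check=True, capture_output=True, text=True)
    return result.stdout.strip()


def main():
    try:
        # Hypothetical step: any command sequence would go here.
        greeting = run([sys.executable, "-c", "print('hello from a child process')"])
        print(greeting)
    except subprocess.CalledProcessError as err:
        # One place to handle failures, instead of checking $? after every line.
        print(f"step failed with exit code {err.returncode}: {err.stderr}", file=sys.stderr)
        return 1
    return 0
```

Calling `main()` returns an exit code you can hand to `sys.exit`, which keeps the script usable from cron or CI the same way a Bash script would be.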

Don’t Use SSH Programmatically

Programmatic SSH calls (using SSH from a program to execute a remote command) are extremely tempting. There’s a good chance you’ve done it between systems, to kick off a job, fetch/manipulate files, or start/stop services. Sure, it’s “bad” and “ugly”, but it works, right? Kind of, and with a lot of costs.

I say it “kind of” works because it can stop working very quickly. Exec calls are fairly reliable, but most people default to Bash calls for this, either due to comfort, language restrictions, or wanting Bash features at the same time.

Bash is notoriously difficult to throw data around in, due to the fun of string escaping (especially with multiple levels). If you’re dealing with things like database dumps or key/certificate contents, you’re liable to wrangle with quoting or escaping issues. These commands become unwieldy to write, as you want to perform more and more actions on the remote system. What if your control flow is conditional, for example? Are you going to write one-line bash scripts to run over the connection? Additionally, if transferring confidential data, you have a substantial risk of it being logged by the system. For example, auditd is often configured in production environments to log the contents of exec calls. If you’re having to use a password, or echo’ing/tee’ing/piping data into something else… beware.
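To make the multi-level escaping problem concrete, here is a small sketch using Python’s standard `shlex.quote`. The `payload` value is invented for the example; the thing to notice is that a string bound for a remote shell has to be quoted once for the remote side and once more for the local side.

```python
import shlex

# Made-up value containing both kinds of quotes.
payload = "it's a \"secret\" value"

# One level of quoting: safe to paste into a local shell command.
local = shlex.quote(payload)

# Two levels: the remote shell un-quotes once more, so the already-quoted
# string has to be quoted again before it is handed to ssh.
remote = shlex.quote(f"echo {local}")

print(local)
print(remote)
```

Printing the two strings shows how quickly the quoting piles up, and that is before adding conditionals or pipes to the remote command.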

To top it all off - it’s incredibly insecure. You run a big risk letting one system access another at the command level, even if the user isn’t privileged. If a downstream system is compromised, it can get to the upstream system. This is an especially unacceptable risk if the downstream system is of lower security.

An alternative to making SSH calls is adding task-specific API endpoints. To use a very common example, suppose you have an in-place multi-step deployment process (fetch sources, compile, start servers) that needs to be remotely initiated. You could make it a small endpoint that accepts necessary parameters (such as what software version to use), and performs the logic and commands itself. If the downstream controller is compromised, all it can do is redeploy the app - it can’t get in and control the system.
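A minimal sketch of such an endpoint, using only Python’s standard library, might look like the following. Everything named here (`/deploy`, the `version` field, the bearer token, the `deploy` placeholder) is an assumption for illustration, and a real service would also sit behind TLS.

```python
import hmac
import json
import re
from http.server import BaseHTTPRequestHandler, HTTPServer

VERSION_RE = re.compile(r"\d+\.\d+\.\d+")  # only accept plain x.y.z versions


def deploy(version):
    """Placeholder for the real fetch/compile/restart steps (hypothetical)."""
    return f"deployed {version}"


def make_handler(token, deploy_fn=deploy):
    class DeployHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            # Read the body up front so every reply is sent on a drained socket.
            length = int(self.headers.get("Content-Length", 0))
            raw = self.rfile.read(length) or b"{}"
            if self.path != "/deploy":
                return self._reply(404, {"error": "not found"})
            sent = self.headers.get("Authorization", "")
            if not hmac.compare_digest(sent.encode(), f"Bearer {token}".encode()):
                return self._reply(401, {"error": "unauthorized"})
            try:
                body = json.loads(raw)
            except ValueError:
                return self._reply(400, {"error": "invalid JSON"})
            version = body.get("version", "") if isinstance(body, dict) else ""
            # The caller picks a version and nothing else; no command text is accepted.
            if not isinstance(version, str) or not VERSION_RE.fullmatch(version):
                return self._reply(400, {"error": "bad version"})
            self._reply(200, {"result": deploy_fn(version)})

        def _reply(self, status, payload):
            data = json.dumps(payload).encode()
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)

        def log_message(self, *args):
            pass  # keep the sketch quiet; a real service would log requests

    return DeployHandler


def make_server(token, port=0):
    # Port 0 asks the OS for any free port; bind to localhost for the sketch.
    return HTTPServer(("127.0.0.1", port), make_handler(token))
```

Running `make_server("some-token", 8080).serve_forever()` would start it. The design choice that matters is the narrow input: a compromised caller can ask for a redeploy of a well-formed version, and that is all.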

Decouple Secrets From The Source Immediately

This seems really basic - and it should be. But back in 2015, not everyone was doing this (myself included), and certainly not pre-production.

Just like the rule “you should always set up a test framework and a hello-world test right away”, you should figure out a secret injection strategy by the time you have your first secret. It becomes far more difficult to justify this work later, especially when you have tens to hundreds of undocumented secrets scattered through the source code/config.
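The simplest injection strategy I can sketch is reading secrets from the process environment and refusing to start without them. The `get_secret` helper and the `DB_PASSWORD` name below are hypothetical; a secrets manager or mounted file would slot in behind the same function.

```python
import os


class MissingSecret(RuntimeError):
    """Raised when an expected secret was not injected."""


def get_secret(name, env=os.environ):
    """Fetch a secret injected by the environment; fail loudly if absent."""
    value = env.get(name)
    if not value:
        # Failing at startup beats discovering a blank password in production.
        raise MissingSecret(f"secret {name!r} is not set")
    return value
```

Application code then calls something like `get_secret("DB_PASSWORD")` once at startup, and the source tree never contains the value itself.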

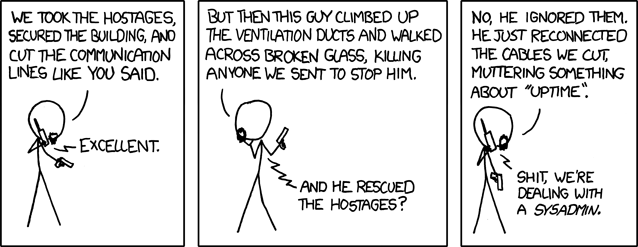

Uptime Isn’t Your Key Metric

Sysadmins are all about uptime, right? That was certainly the only metric I had my sights on early in my career.

XKCD "Devotion to Duty", https://xkcd.com/705/

Your ultimate responsibility is to keep the system as usable as possible, and the data/users as secure as possible. Keeping the system online is a key component of this. However, you will need to handle trade-offs of effort, and directly competing concerns.

Your time will often be better spent shoring up security, or ensuring developer productivity, than adding another 0.01% of uptime. SLOs (see: site reliability engineering) are key for deciding the value of uptime over other work.
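To put numbers on that trade-off, an availability SLO can be turned into a downtime budget with simple arithmetic. This is a back-of-the-envelope sketch; the function name and the 30-day window are my own choices, not a standard.

```python
def downtime_budget_minutes(slo, window_days=30):
    """Minutes of downtime allowed per window for a given availability SLO."""
    if not 0 < slo <= 1:
        raise ValueError("slo must be a fraction such as 0.999")
    return (1 - slo) * window_days * 24 * 60


for target in (0.99, 0.999, 0.9999):
    print(f"{target:.2%} -> {downtime_budget_minutes(target):.1f} minutes per 30 days")
```

A 99.9% target leaves roughly 43 minutes a month, and 99.99% leaves a little over 4. Seeing the budget in minutes makes it easier to judge whether chasing the next fraction of a percent is worth more than the other work on your plate.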

Performing your core responsibilities will also mean causing avoidable downtime, such as rebooting for kernel updates.

Focus on Recovery Times

Adding “more 9s” of availability is great, but has rapidly diminishing returns, and even the biggest and best companies will have failures occur at some point. Users will be much more forgiving of short blips than extended stretches of downtime. Minimizing the MTTR (mean time to recovery) is at least as impactful as minimizing the incident frequency, and is usually far cheaper. Invest in self-healing systems, easily swappable backups, and on-call staff, to name a few key assets.

Decentralize Dependence

This ties in closely with a more specific maxim of system design: “choreography over orchestration”. That is to say, systems that self-manage independently are far more resilient to failures than systems that are centrally managed.

Aside from dynamic systems, decentralizing dependence is a good principle to adhere to in general. For example, if you have a central master SQL database, you’re going to be in big trouble when it goes down. Ideally, you wouldn’t use a system with a single master at all (though sometimes that’s a reasonable choice). But there are ways that the impact can be mitigated:

- Set up a hot failover, allowing another SQL server to become the master if a failure occurs.

- Ensure read operations can still succeed on the other SQL servers.

- Design calls to skip unnecessary writes (such as recording statistics), or fail gracefully if writing is not possible.

- Use a queue or fallback database for vital operations. When the primary database goes back online, the fallback records should be re-integrated.

Any central component, be it a single server, or a virtual component like a cluster, is at risk of going down. When something central must exist, either build the system in a way such that it can limp along while the central component is down, or have a fallback.
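The last two mitigations in the list above (skipping non-vital writes, and queueing vital ones for later) can be sketched in a few lines of Python. The `FallbackWriter` class and its `primary_write` callable are hypothetical, and the in-memory queue stands in for something durable.

```python
from collections import deque


class FallbackWriter:
    """Try the primary store first; park vital writes locally while it is down."""

    def __init__(self, primary_write):
        self.primary_write = primary_write  # callable that raises ConnectionError on failure
        self.pending = deque()  # in-memory only; a real fallback should survive restarts

    def write(self, record, vital=True):
        try:
            self.primary_write(record)
            return "written"
        except ConnectionError:
            if not vital:
                return "skipped"  # e.g. statistics: drop rather than fail the call
            self.pending.append(record)
            return "queued"

    def replay(self):
        """Re-integrate queued records once the primary is back; stop on failure."""
        replayed = 0
        while self.pending:
            try:
                self.primary_write(self.pending[0])
            except ConnectionError:
                break
            self.pending.popleft()
            replayed += 1
        return replayed
```

This sketch does not preserve ordering between replayed and fresh writes, which may or may not matter for your data. The point is that callers keep working, in a degraded way, while the central component is down.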

Aggregate Your Monitoring Data

This is another point that is so, so vital to me now, but took time to clue in on. Chances are, if you’re looking at a log, something is wrong. What happens if the log is inaccessible, due to a downed VM? How can you access its syslog or kernel log? What if the log was swamped with new entries, and logrotate erased the entries that you were looking for? Even worse, what if you’re running an orchestration system like Kubernetes, or a tool like Terraform? Deleting and replacing systems is a normal part of some infrastructure, and their local logs go with them.

Querying disparate logs also sucks, if the problem may exist across many systems, or if you don’t know exactly which instances are at fault.
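On the application side, one small step toward aggregation is emitting logs as structured lines that carry their origin, so a collector can ship them somewhere central and you can still tell instances apart. Below is a sketch with Python’s standard `logging` module; the field names, the `make_logger` helper, and the assumption that some log shipper reads the output stream are all mine.

```python
import json
import logging
import socket
import sys


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line tagged with its origin host."""

    def __init__(self, service):
        super().__init__()
        self.service = service
        self.host = socket.gethostname()

    def format(self, record):
        return json.dumps({
            "time": self.formatTime(record),
            "host": self.host,
            "service": self.service,
            "level": record.levelname,
            "message": record.getMessage(),
        })


def make_logger(service, stream=sys.stdout):
    # Call once per service name; calling again would attach a second handler.
    logger = logging.getLogger(service)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(service))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

With the host and service on every line, a query in the aggregator can cover every instance at once, including ones that no longer exist.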

You Need Disaster Playbooks

This ties in with the emphasis on MTTR in failure scenarios. Playbooks sometimes feel pointless to write, but they’ll save first responders vital time, especially if those first responders aren’t the experts. Playbooks are also a way to make management/executives commit to a course of action, like “what level of security risk is required before we turn the system off”, or “how much data are we willing to drop to stabilize a thundering herd”.

Key playbooks include:

- Triaging and contacting appropriate parties (“is this a sysadmin problem? is it a problem for dev team X?”)

- Recovering/replacing servers

- Restoring from backups

- Handling a data breach

- Handling a suspected network intrusion

- Handling vital infrastructure (DNS, IaaS, etc.) being degraded or offline

A good way to write more day-to-day playbooks is to watch for the times that a specific person (yourself or someone else) had to be called upon, due to specialist knowledge about a broken system. That person should write at minimum a bullet-point playbook of debugging and taken/likely recovery steps. Next time it occurs, someone else should try using the playbook. If they can’t solve the problem quickly, the playbook definitely needs expanding.

Automation Isn’t Just About Rote Time Saved

There are a lot of tables that engineers like to pull out, to show “if you do something X times and it takes Y time, you should spend at most X*Y time on it”. The perspective can help, but it’s reductive and ignores much of why we automate.

Automation is about ensuring success. This means automation is desirable for:

- Time-consuming tasks (the obvious one).

- Tasks that need to be performed without delay.

- Tasks that are error-prone or complex to do correctly.

Tasks that you do infrequently are all the more important for their infrequency. Restoring backups is a great example - it rarely happens, and often it’s a calm use case like “someone wants some archived data”. But when a call goes out and a server is irretrievably down, that data needs to be fetched and redeployed immediately. Which leads me to…

Bad Automation Is Worse Than No Automation

I’ve used a lot of bad automation scripts (and written a good chunk of those myself).

One of the worst mistakes I’ve made happened while using a simple automation script, which I found valuable for old servers. Let me explain the scenario: I had a script to handle the multiple steps involved in expanding a virtual hard drive. It’s a very time-sensitive operation, as it involves shutting down and restarting user-facing services. My script would sync files to the new disk, shut down all services, do a final sync & swap, then bring the services back online. When it worked fine, it meant just seconds of downtime. Unfortunately, one night I was tired and accidentally ran the script twice. It immediately hit a failure - the replacement drive wasn’t sitting at the ready anymore - but it chugged along rather than failing out. This wound up wiping some data, which was supposed to be the “old copy” but was in fact the only copy.

Your tool might be bad automation if…

- It doesn’t check resources/statuses before committing to a course of action.

- It doesn’t react to failures.

- It leaves things in a half-changed state.

- It’s hard to modify.

- It’s easy to misuse.
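The first three items on that list can be addressed with a fairly small pattern: check everything before touching anything, and undo completed steps if a later one fails. Here is a sketch in Python; `run_steps` and the shape of its arguments are assumptions for the example, not a known library.

```python
class PreconditionFailed(RuntimeError):
    """Raised before any change is made, so aborting is always safe."""


def run_steps(preconditions, steps):
    """Check every precondition first, then run steps, undoing on failure.

    preconditions: list of (description, check) where check() returns a bool.
    steps: list of (description, do, undo) callables.
    """
    for description, check in preconditions:
        if not check():
            # Nothing has been changed yet, so stop right here.
            raise PreconditionFailed(description)

    done = []
    try:
        for description, do, undo in steps:
            do()
            done.append((description, undo))
    except Exception:
        # Unwind completed steps in reverse so nothing is left half-changed.
        for _description, undo in reversed(done):
            undo()
        raise
```

In my disk-swap story, a precondition along the lines of “the replacement drive exists and holds a fresh sync” would have stopped the second run before it shut anything down.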

Counter-intuitively, automating easy cases is often better than automating hard cases. Hard cases may still be best handled with human judgement.

Never Rely On Your Firewalls

I’m going to start this point with the caveat that firewalls and IP-whitelisting are extremely important tools. There are oodles of real world situations in which authentication can be leaked, brute forced, or bypassed with an exploit. Firewall and whitelist everything you realistically can. Despite that importance, you should operate and design your systems’ security as if those firewalls didn’t exist.

There are many ways in which an untrusted party can wind up behind your firewalls. They may exploit other software in a system that can access the target, and escalate the intrusion to the target. Something vulnerable (such as a WordPress server, overly-open tu server, or coffee machine) may be unintentionally granted network access to the target via bad network configuration. Someone/something may accidentally drop a firewall. It’s not uncommon…

Additionally, it’s quite likely (especially if you’re working with monoliths or bundled services) that before long you’ll need to allow select 3rd parties access to an otherwise restricted system, such as 3rd party contractors, or a 3rd party integration without a fixed IP space. If you’ve designed the system to rely on network-level access control, you risk overly exposing a system, and/or designing complex tools to manage access. Because of those considerations, you should never use trust-based or unencrypted authentication on any networked system (and ideally, not even within a single machine). In the rare case that secure authentication is not an option for a piece of software, you can adopt a networking tool such as stunnel to carry the connection more securely.
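For services you write yourself, the client side of “encrypted and verified, even on the internal network” is only a few lines with Python’s standard `ssl` module. This is a sketch: the `strict_client_context` name and the optional `ca_file` parameter (for a private CA) are my own.

```python
import ssl


def strict_client_context(ca_file=None):
    """TLS client settings that verify the server even on a 'trusted' network."""
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # create_default_context already enables these; checking them guards
    # against a later edit quietly turning verification off.
    if not context.check_hostname or context.verify_mode != ssl.CERT_REQUIRED:
        raise RuntimeError("certificate verification must stay enabled")
    return context
```

The returned context can wrap a socket via `context.wrap_socket(sock, server_hostname=...)`, or be passed to clients that accept an `ssl.SSLContext`. The server still needs its own authentication on top; this only covers the transport.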

There’s A Reason Why Enterprise IT Is Slow

This was the hardest lesson for me to learn, and it took many readings of the PCI-DSS spec to fully appreciate. Security and agility are often opposing concepts, and when it comes to running systems, security has to win.

Implementing automated and manual (you definitely need both) oversight is time consuming. All software going into important systems needs to be vetted, which includes OS packages and updates. In a traditional system, that falls squarely on the shoulders of the production admins… AKA the sysadmins. You can kiss goodbye to hands-off auto-updating in prod.

Access logs need painfully regular monitoring, offending users need restricting, and network config and secured services need to be sanity-checked and re-checked… over and over again. You rapidly hit a wall of maintenance, where eventually there’s no time left for maintaining more instances and types of systems.