Disclaimer: I’m currently a chair of the new Kubernetes SIG-Usability. However, these are my own opinions. They’re not necessarily shared by the SIG, and don’t necessarily represent future changes in Kubernetes. I also may very well change my mind down the road - take this with a grain of salt if you read this from 2020 onward.

I’ve been thinking a lot this year about the future of Kubernetes. There are two big themes that I keep seeing in my own work and in the public space: operability friction (how easy it is to run), and customization friction (how much work is required to run the way you want).

Where We’re At

Kubernetes Is Meant To Replace Bespoke Platforms

"Kubernetes is a platform platform" is a common tagline.

It’s not the end goal, it’s something to let others build platforms on top of.

This is a very compelling concept - take something off the shelf, and make minimal but high-impact changes for your business and use cases. You get a fairly-custom system (EG conforms with your deployment process, IT concerns, application topology…) without needing to build and maintain most of it yourself.

Dan Kohn gave an opening keynote on that topic at KubeCon Barcelona. In the mid 2010s, with the building blocks for orchestration (VMs & containers) readily available, almost every tech company above a certain size was building their own solution. Kubernetes is what it is today because as an industry, we shifted more engineering power into building a common tool, and put less power into home-growing orchestration stacks. I’m “one of those” open source people, because that’s the kind of collaborative spirit that gets me up in the morning. We can produce better tools, and waste less work on them as a whole, if we collaborate and share.

These two concepts have some friction. If it’s not meant to be a turnkey platform, Kubernetes users will need to customize their setup, and that customization can be extensive.

Foundational Features First

A colleague recently expressed frustration at the lack of features compared to many big, company-internal equivalents (Borg, Titus, Tupperware, etc).

Suppose you want to show a person’s workloads. How do you even do that? Pod service account != “owner”… there’s no concept of resource ownership. Do you hack in some labels or annotations, make sure they propagate downward, and make sure all your Kubernetes tooling respects and protects them? This is a basic thing to have in any system, but not only is it a DIY feature, it’s a hard one.
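To make the label approach concrete, here is a minimal sketch of what "show a person's workloads" looks like when ownership is only a convention. It works on plain dicts shaped like Pod objects rather than a live API client, and the label key `example.com/owner` is a hypothetical name chosen for illustration, not something Kubernetes defines.

```python
# Hypothetical label key; Kubernetes itself has no ownership label.
OWNER_LABEL = "example.com/owner"


def workloads_owned_by(pods, owner):
    """Return the names of pods whose owner label equals `owner`."""
    return [
        pod["metadata"]["name"]
        for pod in pods
        if pod["metadata"].get("labels", {}).get(OWNER_LABEL) == owner
    ]


pods = [
    {"metadata": {"name": "web-1", "labels": {OWNER_LABEL: "alice"}}},
    {"metadata": {"name": "web-2", "labels": {OWNER_LABEL: "bob"}}},
    # A pod where the label was never propagated: it silently has no owner.
    {"metadata": {"name": "batch-1", "labels": {}}},
]

print(workloads_owned_by(pods, "alice"))
```

The lookup itself is trivial. The hard part is everything around it: nothing stops a pod from missing the label, or from carrying someone else's name.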

Or what about security features? What about the fact that every pod in a cluster can talk to every other pod, and to change that, you need to add custom config and install a compatible 3rd party CNI provider or addon?
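As an illustration of the "custom config" half of that, the sketch below builds a default-deny NetworkPolicy as a plain dict and prints it as JSON. The policy name and the `team-a` namespace are made up for the example, and the policy only has an effect when the installed network provider actually enforces NetworkPolicy.

```python
import json


def default_deny_policy(namespace):
    """Build a NetworkPolicy that selects every pod and allows no traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        # Name and namespace are illustrative choices.
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            # An empty selector matches all pods in the namespace.
            "podSelector": {},
            # Both directions are governed, and no allow rules are listed.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


print(json.dumps(default_deny_policy("team-a"), indent=2))
```

A policy like this has to be created in every namespace, which is part of why default-open is so easy to leave in place.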

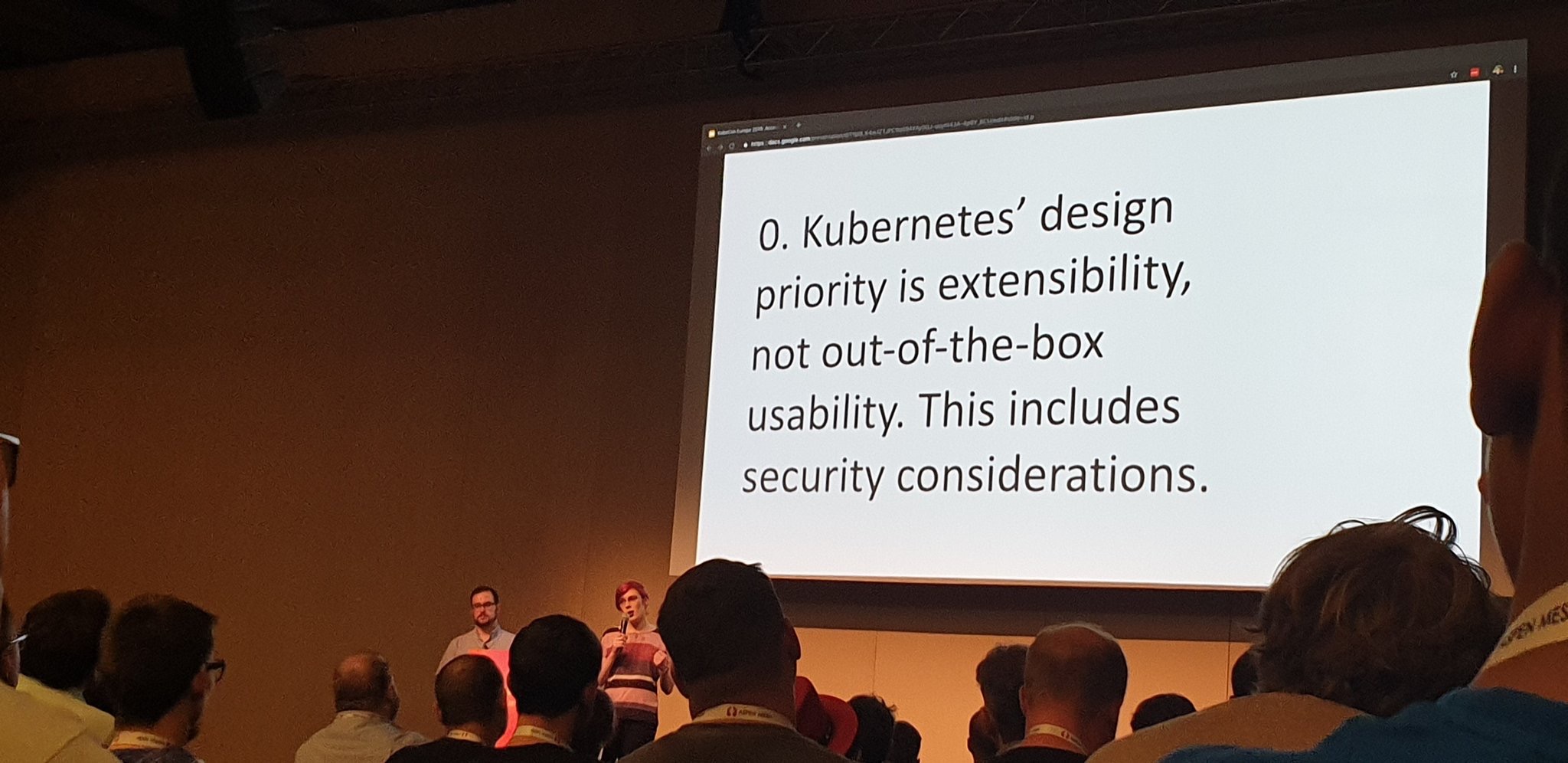

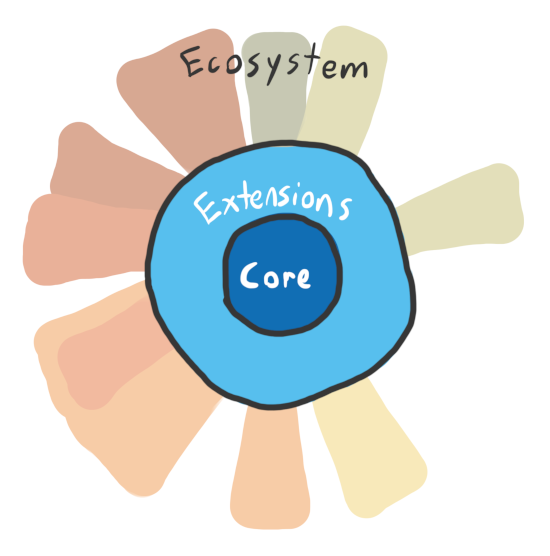

Kubernetes focuses on “foundational” features - the raw features you need for basic operation, and to build more complex features. In my opinion, it executes on this well. For example, CRDs are a breakthrough way to add custom concepts to Kubernetes, with potentially little code (only requiring custom userspace controllers, without deep integration).

There are base concepts, like pods and nodes and the scheduler, without which Kubernetes cannot function. (Note that this is my own classification, though what I would call a base concept does roughly line up with the core API group.)

Then, there are extensions, which have flourished in the more recent years as the core matured. These are resources such as Deployment, PodSecurityPolicy, and I would argue, Service: common functionality that most users would need (with fairly standardized configuration).

This is where Kubernetes, very sharply, draws the line.

Anything more custom is to be implemented by someone else, be that another open source project, a vendor, or an end user.

Vendors And External Projects

It makes sense that custom requirements (or things that the Kubernetes team isn’t able to focus on) be bumped further downstream. However, this hasn’t manifested spectacularly, and to talk about that, I need to talk about the elephant in the room: vendors.

Don’t get me wrong, there’s a lot of fine folk working at vendors, and I suspect I’ll work at a few during my career. However, vendors have a goal: to make money. In the end, that money comes from running infrastructure, providing support, or selling products that add new features/automation.

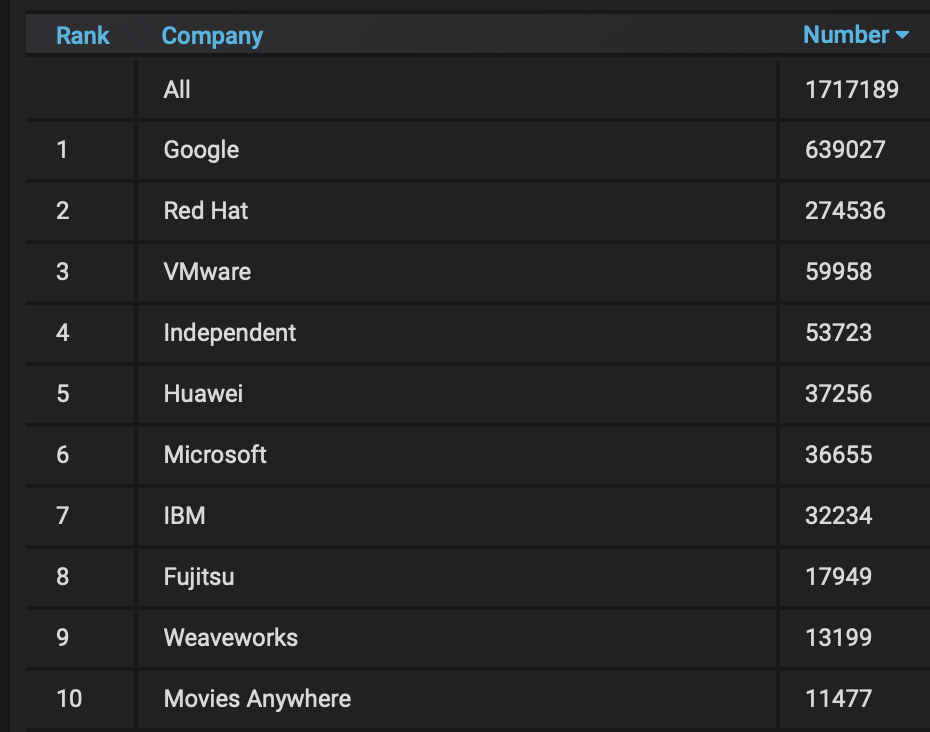

Kubernetes is mostly built by vendors. I pulled some contributor stats (September 2019), which clearly speak for themselves.

To be blunt, the organizations building Kubernetes have conflicting interests with the users of Kubernetes. This is not to accuse anyone of deliberate nerfing or backdooring. Rather, this creates a much more indirect problem of who gets funded to do what.

As a positive example, Azure is driving the recent enhancement to support IPv4/IPv6 dualstack, presumably because it lines up with Azure features and deals. This feature wouldn’t have been implemented in 2019 without their work.

As a negative example, running Kubernetes on bare metal sucks. That’s because the ability to run on bare metal isn’t something that the cloud providers care about… there’s no money in people who don’t use your platform.

Many vendors make money off usability gaps in Kubernetes, and that can create a twisted incentive to keep things that way. If bare metal sucks, running in the cloud is even more compelling. The more security features are lacking, the more users will pay for security products, and so on.

The over-representation of vendors is because few end users come to the table. And that’s a shame, because there are a lot of them.

What’s Missing

I believe we, as a development community, fundamentally misunderstand the way Kubernetes is actually used. As a community, we point to the idea that someone downstream of us will fill in gaps. Don’t like the security gaps? Use a Kubernetes distro that fills them in. Want better cluster observability? Find a vendor.

Some of this is pragmatism - Kubernetes could not exist if we didn’t take a core-outwards approach. However, I think a lot of this comes from the vendor-dominated ecosystem. Things like secure defaults, easy admin tooling, clear monitoring… they’re not addons, they’re table stakes.

I pick on security a lot because it’s a critical and near-universal example of “basics only” gone wrong. There are many ways a stock cluster can be trivially pwned. Everyone needs to install 3rd party software and custom settings, and a lot of engineering time across all end users is wasted in setting up and tuning the same things.

I’ll describe some key things that I think should be addressed with official (core Kubernetes or addon) solutions, rather than reinventing the wheel downstream.

Secure Defaults

A Kubernetes cluster should be reasonably secure until a user explicitly enables an unsafe option. Some examples:

- HostPath mounting should be disabled by default, and carefully controlled.

- Network access should be default-closed, not default-open.

- Secrets should be stored in a more secure way than base64 encoding.

Unsafe options and features should be obviously unsafe.
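The point about Secrets is easy to demonstrate. The short sketch below shows that base64 is an encoding, not encryption: anyone who can read the stored value can reverse it without a key. The value `hunter2` is a made-up example.

```python
import base64

# What a Secret's data field would hold for the made-up value "hunter2".
stored = base64.b64encode(b"hunter2").decode("ascii")

# Reversing it needs no key, only read access to the object.
recovered = base64.b64decode(stored).decode("utf-8")

print(stored)
print(recovered)
```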

A (Lightly) Blessed Path

This is a hard ask, practically and politically, but the ambiguity of “what options and versions just work” is painful as a user.

Kubernetes has many 3rd party components (container runtime, network provider, ingress controllers, etc). They all have their own perks, quirks, and interoperability concerns with one another… especially when you look at versioning.

Shipping a default profile would help the vast majority of users, who have no special needs or strong opinions.

A Better User Management Story

As highlighted above, Kubernetes isn’t really aware of users, beyond authorizing them. This touches on observations we had while researching for the Fine Grained Permissions In Kubernetes talk earlier this year. The ultimate permission boundary in Kubernetes is the namespace, and all tooling/conventions heavily rely on that. As soon as users cohabitate a namespace - let’s say because they’re both maintainers of the same service - it becomes harder to separate them.

There are things you could do with logging or mutating admission webhooks, to track who created/updated what, but that becomes less clear down the chain. For example, if I create a ReplicaSet, the system user (via the ReplicaSet controller) will be the one who actually creates pods. My action is obscured.
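One partial workaround is to walk the ownership chain back up. Objects created by a controller carry `metadata.ownerReferences` pointing at their parent, so a tool can climb from a pod to the object a human actually touched. The sketch below does that over plain dicts; the `example.com/created-by` annotation is hypothetical, standing in for whatever an admission webhook might record.

```python
def top_level_owner(obj, objects_by_uid):
    """Follow ownerReferences upward until an object has no known owner."""
    current = obj
    seen = set()
    while True:
        refs = current["metadata"].get("ownerReferences", [])
        if not refs:
            return current
        uid = refs[0]["uid"]  # simplification: follow only the first owner
        if uid in seen or uid not in objects_by_uid:
            return current  # cycle or missing parent: stop where we are
        seen.add(uid)
        current = objects_by_uid[uid]


replica_set = {
    "kind": "ReplicaSet",
    "metadata": {
        "name": "web",
        "uid": "rs-1",
        # Hypothetical annotation written by an admission webhook.
        "annotations": {"example.com/created-by": "alice"},
    },
}
pod = {
    "kind": "Pod",
    "metadata": {
        "name": "web-abc12",
        "uid": "pod-1",
        "ownerReferences": [{"kind": "ReplicaSet", "name": "web", "uid": "rs-1"}],
    },
}

owner = top_level_owner(pod, {"rs-1": replica_set})
print(owner["kind"], owner["metadata"]["annotations"]["example.com/created-by"])
```

This recovers the intent only if every link in the chain is still present, and only if something recorded the human at the top in the first place.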

Correlated API Views

This is another topic I’ve ranted about before.

Kubernetes has a normalized API model. This sucks for operators and platform developers downstream, as they must build views out of that raw data themselves.

Some common views:

- Pods on a node

  - This one has many varieties, like logs or host stats

- Permissions attached to a service account

- Permissions attached to a higher-level resource (EG Deployment)

- Pods using a service account

  - Or owned by a specific user, if such a thing existed…

- Pods corresponding to a Service or Ingress
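As a rough sketch of what building these views yourself involves, the functions below derive two of them ("pods on a node" and "pods corresponding to a Service") from raw objects represented as plain dicts. The function names are illustrative, and a real tool would also need to page through the API and keep the result fresh.

```python
from collections import defaultdict


def pods_by_node(pods):
    """Group pod names by the node they are scheduled on."""
    view = defaultdict(list)
    for pod in pods:
        node = pod["spec"].get("nodeName")  # unset until the pod is scheduled
        if node:
            view[node].append(pod["metadata"]["name"])
    return dict(view)


def pods_for_service(service, pods):
    """Return names of pods in the Service's namespace matching its selector."""
    selector = service["spec"].get("selector") or {}
    if not selector:
        return []  # a Service without a selector has no automatic pod set
    namespace = service["metadata"]["namespace"]
    return [
        pod["metadata"]["name"]
        for pod in pods
        if pod["metadata"]["namespace"] == namespace
        and all(
            pod["metadata"].get("labels", {}).get(key) == value
            for key, value in selector.items()
        )
    ]


pods = [
    {"metadata": {"name": "web-1", "namespace": "shop", "labels": {"app": "web"}},
     "spec": {"nodeName": "node-a"}},
    {"metadata": {"name": "web-2", "namespace": "shop", "labels": {"app": "web"}},
     "spec": {"nodeName": "node-b"}},
    {"metadata": {"name": "db-1", "namespace": "shop", "labels": {"app": "db"}},
     "spec": {}},  # still pending, so it appears on no node
]
service = {"metadata": {"name": "web", "namespace": "shop"},
           "spec": {"selector": {"app": "web"}}}

print(pods_by_node(pods))
print(pods_for_service(service, pods))
```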

Octant is a neat 3rd-party tool to expose this kind of functionality, though I haven’t dug deeply into it yet.

More Intelligent Communication Between Components

Clusters can wind up in an odd state, due to problems that are “obvious”, yet the system fails to respond.

As an example: suppose you boot a worker node, which uses a newer API version than the control plane. As long as the node API stays compatible, the node will be marked Ready, pods will be scheduled, etc.

But suppose the pod API has changed, and isn’t compatible. Uh oh! Those pods on the node? They can’t boot, and they’re stuck, because they won’t be rescheduled.

Or, what if the endpoint API changes? Pods on the node may not be able to properly use Services.

A situation like this is avoidable. Perhaps node readiness could explicitly check API compatibility. Or perhaps the scheduler could be aware of API compatibility (proactively or retroactively).
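A minimal sketch of the first idea, assuming readiness could be gated on a simple version comparison: the node is treated as compatible only if its kubelet is not newer than the API server and not too far behind it. The function names are hypothetical, the default lag of two minor versions is an assumption to check against the version skew policy for your release, and the parser assumes plain `vMAJOR.MINOR.PATCH` strings.

```python
def parse_minor(version):
    """Extract (major, minor) as ints from a string such as 'v1.16.2'."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def node_is_compatible(kubelet_version, apiserver_version, max_minor_lag=2):
    """Hypothetical readiness gate based on kubelet/API server version skew."""
    k_major, k_minor = parse_minor(kubelet_version)
    a_major, a_minor = parse_minor(apiserver_version)
    if k_major != a_major:
        return False
    lag = a_minor - k_minor  # negative means the node is newer than the control plane
    return 0 <= lag <= max_minor_lag


print(node_is_compatible("v1.15.3", "v1.16.0"))
print(node_is_compatible("v1.17.0", "v1.16.0"))
```

A version comparison is a blunt proxy for real API compatibility, but even this much would catch the "newer node, older control plane" case before pods are scheduled onto it.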