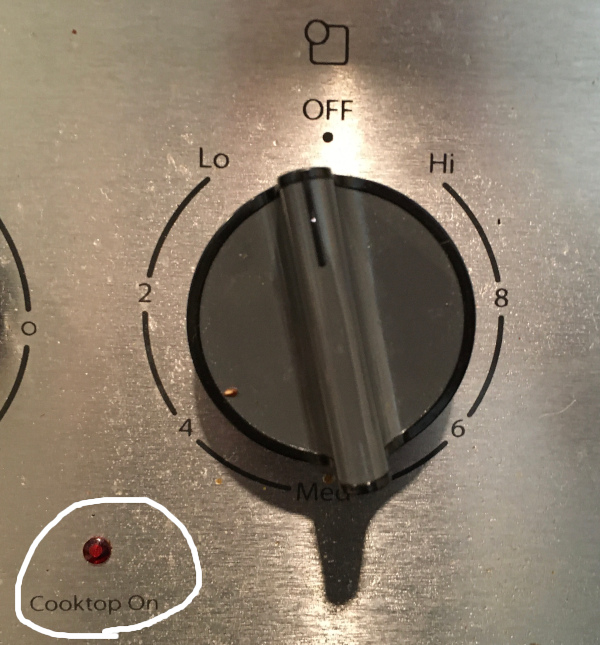

Recently, I noticed something odd in my kitchen. The “Hot Cooktop” light was on, and sure enough, one of the hotplates was warm. I was initially confused, having supposedly turned the stove off hours prior. The “Cooktop On” light was off, as I expected. Do you see what happened?

One dial was on, albeit at a very low setting. I confirmed by experimenting that the hotplate was on, even though the “Cooktop On” indicator does not light up until turned another few degrees.

The answer to “how could this happen?” was also somewhat obvious. The “Cooktop On” indicator circuit is either not sensitive at lower power levels, or is a completely distinct switch operated by the same dial. I can’t say without knowing more about the writing, which I don’t care to. Regardless of implementation: the light does not indicate what I would expect (“there is nonzero power going to a hotplate”).

This problem is a classic one in system telemetry.

Designers want to monitor when situation foo is occurring,

so they monitor output x.

Output x may be a good approximation of foo,

especially under standard test conditions,

but it is not the same thing.

Consider a common mistake in monitoring batch-like processes. Units of work are probably expected to be completed in a fixed time range, e.g. form digitization should be completed within $minutes, or containers launched within $seconds. We want to know if the system is stalled.

A common approach is to monitor the number of in-progress units, and alarm on an excessive number. This may approximate the desired effect, but it is prone to false positives and false negatives. Suppose that many units of work were created at once (over-inflating the in-progress count), or that only a single unit of work got “stuck”. I have seen others, such as alarming when no units of work are completed within a time frame.

The proper solution would be to monitor the lead time of all units. If any unit (or a higher number of units, depending on your SLOs) fails to complete in time, an alert is triggered.

This solution can also be more effective when breaking long units of work into distinct phases. Suppose we have a batch job that’s supposed to do something with the day’s data, and give a report to executives the next morning. We can say that we expect the work to be done by 7 AM. However, if we find out at 7 AM that a job has failed, we don’t necessarily have enough time to complete the job. Instead, we can break out distinct phases, e.g. scheduling/startup, fetching data, processing, and storing. If an individual phase fails to make progress, a person can be alerted much sooner. For example, we might say that we expect scheduling to happen within 20 minutes, and would page a human at 12:20 rather than at 7:00 if no compute resources are available. By doing this, we are effectively answering our question, “is the system making progress as expected?” (We would also hopefully have a robust system and more proactive monitoring, to reduce the chance of needing to wake a human up at all.)

I invite you to think of scenarios where your own monitoring does not properly address the health or failure that it is supposed to observe. There are some other areas where I have seen particularly frequent pitfalls:

- System health checks.

- Instance health checks.

- Failed requests.

- Average response times.

Oftentimes, these problems can be caught be asking very simple questions, around scenarios where a false alarm or hidden issue could occur.